AIRIS

AIRIS is a powerful approach to model based reinforcement learning.

Through self-driven observation and experimentation, it is able to generate dynamic world models that it uses to formulate its own goals and plans of action. These goals can also be influenced by an outside source, such as a user, to direct AIRIS to the completion of specific tasks.

AIRIS has demonstrated the ability to quickly, effectively, and completely autonomously learn about an environment it is operating in.

Airis is being developed in 3 stage

AIRIS has demonstrated the ability to quickly, effectively, and completely autonomously learn about an environment it is operating in.

Airis is being developed in 3 stage

Stage 1 – Prototype Alpha (Complete)

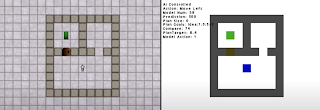

The Prototype Alpha of AIRIS was created using a video game development tool called GameMaker: Studio. This allowed for the rapid development of test environments and ensured that the concept was general enough to not require specialized software or hardware.

This prototype was fed semi-supervised information (sprite types and locations relative to it), from the game engine for its inputs. This allowed for rapid debugging and concept testing, but had the side effect of limiting its overall capabilities. At its peak performance, it was able to complete a 5 step puzzle (collect extinguisher, put out fire, collect key, unlock door, and collect battery) with no initial information about the game. Just by autonomously exploring, observing, and experimenting.

Stage 2 – Prototype Beta (In-progress)

Similar to the Alpha prototype, this version of AIRIS is also being developed in GameMaker: Studio. However, this version uses unsupervised raw pixel data for inputs. This will allow for a much broader variety of testing environments. Such as more complex puzzles, varying world mechanics, and image recognition.

. This prototype is still in development, but its current features include :

- Unsupervised, Online Learning:

It can be placed into an environment and will learn how to solve tasks with no prior training.. Indirect Learning :

It can be trained by observing a human performing the desired task(s). . One Shot Learning :

It requires very little training data to become proficient at tasks.

. Information Incomplete Modelling :

It can operate in environments where only some information about the environment is available at any one time.

- Its knowledge is domain agnostic. This allows knowledge of multiple domains to seamlessly coexist in the same agent.

. Knowledge Merging :

Both intra-domain and multi-domain knowledge can be shared between agents.

Stage 3 – Final Prototype

Once the concepts behind the per-pixel input Prototype Beta have been tested and proven, the final prototype will be written in Python. This will allow further optimization, capability testing, and proving via the numerous and ever growing number of AI benchmarks. Such as the OpenAI Gym and Microsoft’s Project Malmo.

Comments

Post a Comment